Next-Generation AI: Overcoming LLM Limitations

Large Language Models (LLMs) automate content creation, improve efficiency, and attract major investment, yet they still grapple with bias, hallucinations, and limited reasoning abilities. To address these shortcomings, a new wave of next-generation AI is emerging that aims for better accuracy, deeper contextual understanding, and more efficient learning.

In this article, we explore how Next-gen AI is advancing beyond traditional LLMs by integrating innovative technologies such as:

- Retrieval-Augmented Generation (RAG): Pulls information from external sources to improve accuracy.

- Multimodal Models: Process text, images, and other data types together for broader functionality.

- Hybrid Neural-Symbolic Systems: Combine deep learning with structured reasoning for better decision-making.

- Quantum Computing: Could speed up certain classes of complex calculations, potentially transforming AI performance.

- Neuromorphic Chips: Mimic the brain’s efficiency to improve processing power and adaptability.

- Open-Source AI: Encourages collaboration and innovation, driving faster improvements.

These breakthroughs could help AI overcome current challenges and achieve deeper understanding.

1. Beyond Pattern Recognition: Evolving Toward Next-Generation AI

Simply scaling up parameters does not fully solve hallucinations, bias, or contextual reasoning. Researchers are pushing on complementary fronts:

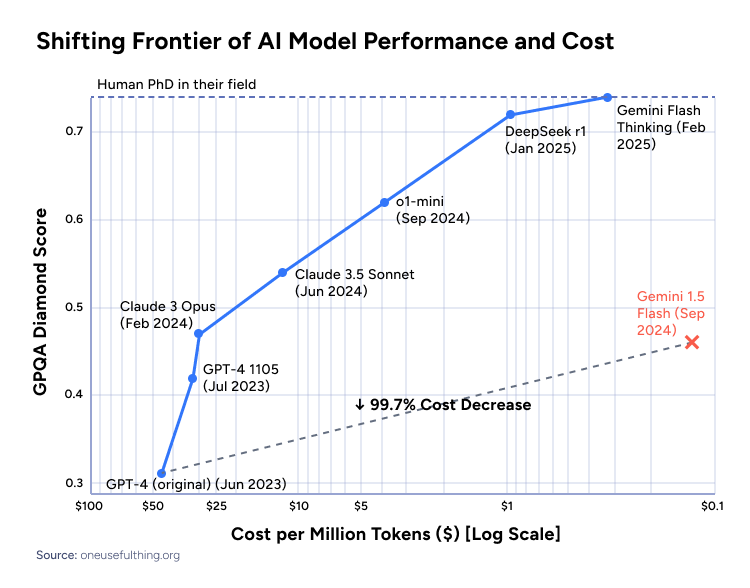

New Generation of AI: Rapid Advancements & Cost Reduction

The pace of innovation in Large Language Models (LLMs) has been staggering, with each new generation improving both performance and affordability. The accompanying chart highlights this shift, showing how models such as Claude 3, GPT-4, and DeepSeek have made significant strides in reasoning ability while the cost per million tokens has dropped dramatically.

As shown in the graph:

- Lower Costs, Wider Adoption: As generative AI becomes more affordable, smaller enterprises can access frontier capabilities once limited to big tech.

- Approaching Human Expertise: Models like Claude 3 and Gemini Flash approach expert-level performance on some specialized tasks.

1.1 Retrieval-Augmented Generation (RAG)

Instead of relying purely on static training data, LLMs can query external databases or web sources on the fly. This helps reduce hallucinations and keep responses up to date.

For instance, in the “Self-RAG” approach, proposed by researchers at the University of Washington and collaborators, the model learns to decide when to retrieve and to critique both the retrieved passages and its own draft answer, which helps reduce hallucinations in complex tasks.
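The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration, not a production pipeline: the `DOCUMENTS` corpus, the bag-of-words scoring, and the `build_prompt` helper are all assumptions made for the example, and the final call to a language model is left out.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory corpus standing in for an external knowledge source.
DOCUMENTS = [
    "Loihi 2 is a neuromorphic research chip from Intel.",
    "LoRA fine-tunes a model by training small low-rank matrices.",
    "Retrieval-augmented generation adds retrieved passages to the prompt.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Prepend the retrieved passages so the model answers from them."""
    context = "\n".join(f"- {passage}" for passage in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )
```

In a real system the word-count similarity would typically be replaced by learned embeddings and a vector index, but the shape of the pipeline (score, select, prepend to the prompt) stays the same.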

1.2 Integrating Knowledge Graphs

When an LLM is paired with a knowledge graph (structured data describing concepts and their relationships), it can verify its outputs against a source of truth. Early studies suggest that prompting LLMs with relevant graph information improves factual accuracy and reduces nonsensical answers.
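As a rough sketch of what "verifying against a source of truth" might look like, the snippet below checks a claim, already extracted as a (subject, relation, object) triple, against a tiny graph. The `KNOWLEDGE_GRAPH` contents and the `check_claim` helper are hypothetical, and the "contradicted" verdict assumes each subject has exactly one object per relation, which will not hold for every relation.

```python
# Hypothetical knowledge graph stored as (subject, relation, object) triples.
Triple = tuple[str, str, str]

KNOWLEDGE_GRAPH: set[Triple] = {
    ("Loihi 2", "developed_by", "Intel"),
    ("Qiskit", "developed_by", "IBM"),
    ("Pythia", "developed_by", "EleutherAI"),
}


def check_claim(claim: Triple, graph: set[Triple]) -> str:
    """Return 'supported', 'contradicted', or 'unknown' for one extracted claim."""
    subject, relation, _ = claim
    if claim in graph:
        return "supported"
    # Same subject and relation but a different object: the graph disagrees.
    # Assumption: the relation allows only one object per subject.
    if any(s == subject and r == relation for s, r, _ in graph):
        return "contradicted"
    return "unknown"
```

An "unknown" verdict is as useful as a contradiction: it tells the system the graph cannot vouch for the statement, so the answer can be flagged rather than presented as fact.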

1.3 Multimodal Models

Humans combine visual, auditory, and textual information seamlessly. Next-gen AI systems do the same:

- CLIP, Flamingo, GPT-4V

These models connect text and images so that both can be reasoned about together. CLIP, for example, uses contrastive learning to align text and images in a shared semantic space, enabling visual reasoning.

- GPT-4o and Gemini 1.5 Pro

Emerging variants from OpenAI and Google go further, processing video and audio as well, which opens the door to uses such as real-time industrial IoT diagnostics and advanced robotics.
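To make the idea of a shared semantic space concrete, here is a small sketch of how an aligned model can match an image to a caption by comparing embeddings. The three-dimensional vectors are made up for illustration. In a real contrastively trained model they would come from separate image and text encoders and have hundreds of dimensions.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings: pretend outputs of an image encoder and a text encoder
# that were trained to place matching image-caption pairs close together.
IMAGE_EMBEDDING = [0.9, 0.1, 0.2]  # a photo of a dog
CAPTION_EMBEDDINGS = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a car": [0.1, 0.9, 0.3],
    "a bowl of soup": [0.2, 0.1, 0.9],
}


def best_caption(image_vec: list[float], captions: dict[str, list[float]]) -> str:
    """Pick the caption whose embedding is closest to the image embedding."""
    return max(captions, key=lambda text: cosine(image_vec, captions[text]))
```

Because both modalities live in the same space, classification reduces to a nearest-neighbor lookup over candidate captions, with no task-specific training step.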

1.4 Hybrid AI (Neural + Symbolic)

Purely data-driven neural networks often struggle with logical reasoning or interpretability. Hybrid AI unites LLMs with a symbolic engine that applies explicit rules or logic. This approach can help ensure outputs follow consistent reasoning steps—and offer explainability when needed.

Key Benefits of Hybrid AI:

- Symbolic Logic for Governance

Symbolic AI allows developers to encode known rules, constraints, or regulatory guidelines in structured form. The symbolic engine can then validate or override an LLM’s output when it conflicts with established logic or compliance requirements.

- Dynamic Planning and Multi-Step Reasoning

Neural networks excel at pattern recognition but often falter in chaining multiple steps of logic. By delegating multi-step planning to a symbolic module, the system can more reliably handle complex tasks and avoid illogical “hallucinated” sequences.

- Explainability and Transparency

Combining a black-box neural net with a rule-based system adds a layer of interpretability. When an output is challenged, organizations can point to the symbolic engine’s “reasoning rules” to clarify how final answers were derived.

- Case Study: Autonomous Vehicles

Many self-driving systems blend neural perception (e.g., recognizing pedestrians and traffic signals) with symbolic decision-making (stopping at a red light, obeying local laws). This synergy balances the adaptability of deep learning with the rigor of rule-based logic.
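The validate-or-override pattern from the points above can be sketched as a small rule layer that sits after the neural component. Everything here is illustrative: the `Rule` class, the two driving rules, and the choice of "stop" as the fallback action are assumptions, and the `proposal` dictionary stands in for whatever a perception model or LLM would output.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    violated: Callable[[dict], bool]  # returns True when the proposal breaks the rule


# Hypothetical symbolic rules encoding hard constraints.
RULES = [
    Rule("stop_at_red_light", lambda p: p["light"] == "red" and p["action"] != "stop"),
    Rule("yield_to_pedestrian", lambda p: p["pedestrian_ahead"] and p["action"] != "stop"),
]


def validate(proposal: dict, rules: list[Rule]) -> tuple[dict, list[str]]:
    """Return the (possibly overridden) decision plus the names of violated rules."""
    violations = [rule.name for rule in rules if rule.violated(proposal)]
    if violations:
        # Override with a conservative fallback; the rule names double as the explanation.
        return {**proposal, "action": "stop"}, violations
    return proposal, violations
```

The list of violated rule names is what provides the explainability benefit: when a decision is questioned, the system can report exactly which constraint forced the override.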

1.5 Human-Like Learning Efficiency

Current LLMs require massive datasets and extensive training. Researchers like Yann LeCun propose “objective-driven AI” (e.g., Joint Embedding Predictive Architecture, JEPA) where AI learns through observation, prediction, and experimentation—similar to how humans gain common sense. Such methods could let models update themselves without frequent, full-scale retraining.

How Could This Look in Practice?

- Meta-Learning and Few-Shot Approaches

Modern “few-shot” or “meta-learning” techniques enable a model to generalize from minimal examples, mimicking how humans learn quickly from limited data.

- Continuous Updating

Instead of retraining the entire model, systems can refine parts of their internal state (like a “working memory”) to adapt to new information as it arrives.

- Reduced Training Costs

Training large LLMs from scratch is resource-intensive. More efficient, incremental learning cuts down on hardware and energy expenses.

- Stronger Common Sense

By training in a manner closer to human developmental learning (observing the environment, making predictions, testing hypotheses), models may develop more robust “common sense” reasoning over time.

1.6 LLM-Based Autonomous Agents

Beyond integrating knowledge graphs, symbolic logic, or multimodal data, a rapidly emerging frontier is the development of LLM-based autonomous agents—sometimes called “generative agents.” These systems use powerful language models (like GPT-4 or Claude) but add tool usage, long-term memory, and iterative planning loops to solve more complex tasks with minimal human oversight.

Self-Directed Task Execution

In projects such as Auto-GPT and BabyAGI, the LLM not only generates responses but also “plans” and “executes” a chain of subtasks, accessing external tools (e.g., web browsers, APIs, or databases) as needed. This can radically expand an AI’s ability to handle lengthy, multi-step objectives that go beyond a single prompt-and-response pattern.
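A stripped-down version of such a plan-and-execute loop might look like the sketch below. It is not how Auto-GPT or BabyAGI are implemented: the `plan` function is a hard-coded stand-in for an LLM planning call, the two entries in `TOOLS` are hypothetical stubs, and `{previous}` is an invented placeholder convention for passing one step's output to the next.

```python
from typing import Callable

# Hypothetical tools; a real agent would call a browser, an API, or a database here.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda arg: f"search results for '{arg}'",
    "summarize": lambda arg: f"summary of [{arg}]",
}


def plan(objective: str) -> list[tuple[str, str]]:
    """Stand-in for an LLM planning call: returns (tool, argument) subtasks.

    The placeholder '{previous}' is filled with the prior step's output.
    """
    return [("search", objective), ("summarize", "{previous}")]


def run_agent(objective: str, max_steps: int = 5) -> list[str]:
    """Execute the planned subtasks in order, feeding each output forward."""
    outputs: list[str] = []
    for tool_name, argument in plan(objective)[:max_steps]:
        previous = outputs[-1] if outputs else ""
        argument = argument.replace("{previous}", previous)
        outputs.append(TOOLS[tool_name](argument))
    return outputs
```

The `max_steps` cap is a deliberate design choice: bounding the loop is one of the simplest safeguards against an agent that keeps generating subtasks indefinitely.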

Memory and Context Persistence

While standard LLMs suffer from limited context windows, autonomous agent frameworks provide specialized memory stores—often in vector databases—to retain and retrieve information over time. This supports more “lifelike” continuity, enabling the AI to revisit earlier decisions, learn from mistakes, and incorporate new data without a full model retraining.

Real-World Application

Autonomous AI agents can be applied across many functions and industries. In business intelligence, they can gather and analyze market data on their own, generating real-time insights and recommending actions to managers. In software engineering, beyond generating code, they assist with debugging and refactoring by iterating on test results and integrating with tools like GitHub. As virtual assistants, they combine knowledge retrieval, event scheduling, and email drafting to streamline workflows.

Challenges & Opportunities

While these agentic systems offer unprecedented automation, they also intensify concerns around alignment, safety, and accountability. Mistakes can compound over multiple self-directed steps, and misguided objectives can lead to unintended outcomes. Research is ongoing to integrate robust guardrails—much like in Hybrid AI—and advanced monitoring to ensure agents act within ethical and regulatory bounds.

Looking Ahead

Experts predict that LLM-based autonomous agents could soon orchestrate complex workflows, collaborating with human teams much like junior “digital employees.” Coupled with breakthroughs in real-time knowledge grounding, agentic LLMs might tackle tasks with fewer constraints and progressively approach more general intelligence—albeit with careful oversight and continuous refinement.

1.7 AI Adoption Challenges: Key Barriers to Overcome

While AI adoption continues to accelerate across industries, organizations face persistent roadblocks that limit full-scale deployment. According to Gartner's research, the top challenges include:

- Enterprise maturity gaps, such as lack of skilled staff and concerns about data scope and governance.

- Fear of the unknown, with uncertainty around AI benefits, security risks, and measurement of ROI.

- Finding a starting point, particularly in identifying clear use cases and securing funding.

- Vendor complexity, including integration challenges and confusion over vendor capabilities.

1.8 Sustainability and Ethical Depth

- Green AI:

Parameter-efficient fine-tuning (LoRA, QLoRA) and quantized versions of models (e.g., Meta’s Llama 3) can reportedly cut energy usage by up to 70% without severely sacrificing accuracy.

- Ethical Frameworks:

Organizations increasingly need to build strong data quality frameworks, for example by adopting guidelines like NIST’s AI Risk Management Framework or the EU’s AI Liability Directive, often paired with tools like BiasBusters AI for real-time bias checks.
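To see why the parameter-efficient methods mentioned under Green AI are cheaper, it helps to count trainable parameters. LoRA freezes a weight matrix of shape d × k and trains two small matrices instead, B (d × r) and A (r × k), so the trainable count drops from d·k to r·(d + k). The sketch below works through that arithmetic. The sizes are illustrative and not taken from any specific model, and fewer trainable parameters is only one driver of energy use, so this does not by itself reproduce any particular savings figure.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for LoRA: B is d x r and A is r x k, so r * (d + k)."""
    return r * (d + k)


# Illustrative sizes (assumed for the example, not from a specific model).
d, k, r = 4096, 4096, 8
full = full_finetune_params(d, k)
lora = lora_params(d, k, r)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

With these numbers the LoRA update trains well under 1% of the parameters in the layer, which is where the reductions in optimizer memory and training compute come from.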

2. Forward-Looking Vision on Next-Generation AI

2.1 Quantum-Enhanced AI

Hybrid quantum-classical architectures could tackle combinatorial optimization in logistics or drug discovery. Projects linking IBM’s Qiskit with advanced LLMs are in early research stages.

2.2 Neuromorphic Chips

Intel’s Loihi 2 runs spiking neural networks for highly efficient inference, potentially offering up to a 100× improvement in energy efficiency, which is vital for real-time or edge deployments.

2.3 Open-Source Momentum

Organizations like Mistral AI and EleutherAI (with models like Pythia) are challenging proprietary dominance by providing high-quality, openly available code and weights, driving innovation and accountability in the ecosystem.

2.4 Very Recent Developments

- Claude 3 emerges as a strong competitor to GPT-4 on certain tasks, especially coding and creative reasoning.

- Llama 3 and DeepSeek Coder narrow the gap between open-source and proprietary systems.

- New Evaluation Frameworks: Researchers move beyond simple benchmarks, focusing on adversarial testing and chain-of-thought clarity.

- Recursive Self-Improvement: Early experiments let models propose improvements to their own architectures—a glimpse into self-evolving AI.

3. Strategic Recommendations for Business Leaders

- Use LLMs, But Add Guardrails

They can generate immediate value (e.g., drafting content, assisting with coding or queries). In high-stakes workflows, such as financial reporting or patient care, employ human review and domain constraints to catch hallucinations or bias.

- Combine LLMs With Other Tools

Model orchestration frameworks can switch between specialized LLMs, knowledge graphs, or RAG-based pipelines to maximize accuracy. This approach keeps knowledge current and reduces reliance on static training.

- Adopt TinyML and On-Device Solutions

Small language models (e.g., Microsoft’s Phi-3) allow on-device generative AI, avoiding cloud latency and data-transfer costs. This is especially relevant for IoT and edge computing scenarios.

- Monitor Ethical Debt

Just as technical debt accumulates if not addressed, “ethical debt” grows when bias, privacy gaps, or regulatory non-compliance remain unresolved. AI debt audits can spot these issues early and protect brand reputation.

- Implement GenAI Ops (MLOps 2.0)

Modern AI pipelines should incorporate retrieval-augmented generation steps, symbolic “checkpoints,” and robust logging for interpretability. This supports end-to-end governance from model training to production.

- Train Your Organization

Upskill employees in AI fundamentals. Based on implementation experience across multiple industries, we’ve seen that teams with strong data literacy better harness new AI tools and spot pitfalls faster.

- Stay Flexible and Vendor-Agnostic

The AI landscape evolves rapidly. A vendor-agnostic architecture allows you to swap in new models (multimodal, MoE, or quantum-enhanced) as they mature without significant rework.

- Prepare for Regulatory and Ethical Requirements

AI risk management is now table stakes. Tools like BiasBusters AI and frameworks from NIST and the EU help you proactively maintain compliance, especially as global regulations tighten.
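The orchestration and vendor-agnostic recommendations above largely come down to coding against an interface rather than a specific provider. The sketch below shows one way that might look. The `TextModel` protocol, the `EchoModel` stub, and the `Router` class are all hypothetical names for this example; a real backend would wrap a provider SDK or a local model behind the same `generate` method.

```python
from typing import Protocol


class TextModel(Protocol):
    """Minimal interface every backend must satisfy."""

    def generate(self, prompt: str) -> str: ...


class EchoModel:
    """Stub backend standing in for any hosted or local model."""

    def __init__(self, name: str) -> None:
        self.name = name

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class Router:
    """Dispatch each request to a task-specific backend, or to the default."""

    def __init__(self, backends: dict[str, TextModel], default: str) -> None:
        self.backends = backends
        self.default = default

    def generate(self, prompt: str, task: str = "") -> str:
        model = self.backends.get(task, self.backends[self.default])
        return model.generate(prompt)
```

A short usage example: `Router({"general": EchoModel("general-llm"), "code": EchoModel("code-llm")}, default="general")` sends requests tagged `task="code"` to the code backend and everything else to the general one. Swapping a vendor then means replacing one entry in the dictionary, not rewriting the calling code.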

4. The Road Ahead

Despite their flaws, LLMs have opened our eyes to AI’s transformative potential. Over the next five to ten years, advances in retrieval, knowledge grounding, and multimodal integration could yield systems that genuinely understand context, reason more reliably, and stay current. Hybrid architectures may also enhance explainability, helping enterprises and regulators alike trust AI outputs in sensitive domains.

For individuals aspiring to enter the field, receiving strong AI training is essential to stand out in a competitive job market. Learning how to become an AI engineer typically involves mastering machine learning fundamentals, gaining hands-on experience, and staying updated on the latest research.

In practical terms, we can expect:

- Deeper industry penetration of LLM-based solutions, from manufacturing robots interpreting textual instructions and visual cues to healthcare systems synthesizing patient data.

- Massive private and public capital to keep fueling AI R&D. The race among the U.S., EU, and China to lead in AI will drive investments in computing infrastructure, specialized chips, and next-gen models.

- Convergence of AI with robotics and IoT, as large models integrate with sensors, physical environments, and real-time data for more autonomous operations.

- Tighter regulation requiring transparency, bias control, and consumer protections, spurring new “Responsible AI” products and compliance services.

For business leaders, the imperative is twofold: harness the power of today’s LLMs for immediate gains, and remain agile for tomorrow’s leaps in AI architecture. Those who blend human expertise with advanced AI tools most effectively will define the innovation frontier in the coming decade.

Conclusion

Large Language Models have sparked a generative AI revolution, attracting unprecedented investment and promising productivity gains across sectors. Yet they remain, at the core, statistical word predictors lacking true comprehension. Hallucinations, biases, and static knowledge underscore the need for careful oversight and complementary solutions—like retrieval-augmented generation, hybrid AI, and continuous fine-tuning.

Enterprises adopting LLMs now can secure immediate value—provided they implement robust guardrails, domain integration, and thorough human reviews. Meanwhile, the next generation of AI will likely feature systems that learn efficiently, handle multimodal data seamlessly, and offer transparent reasoning. By understanding current limitations and emerging directions, organizations can future-proof their strategies, leveraging AI as a powerful ally across diverse industries. The journey has only just begun.
