Top 5 Digital Trends for 2025 and Beyond

To make sense of the top 2025 digital trends, companies need to understand how key technologies such as AI-powered automation, quantum-secure encryption, and next-gen computing are leading the charge. As these innovations advance, companies must keep pace to remain competitive.

From agentic AI transforming enterprise workflows to nuclear power sustaining the energy-hungry infrastructure of modern data centers, 2025 digital trends highlight the convergence of AI, IoT, edge computing, and sustainability efforts. Meanwhile, breakthroughs in micro LLMs and digital twins are enabling more efficient, scalable, and intelligent systems across industries.

This article explores the top 2025 digital trends, offering key insights into how these technologies will drive innovation, enhance operational efficiency, and shape the future of business.

Digital Trend #1: The Rise of Agentic AI

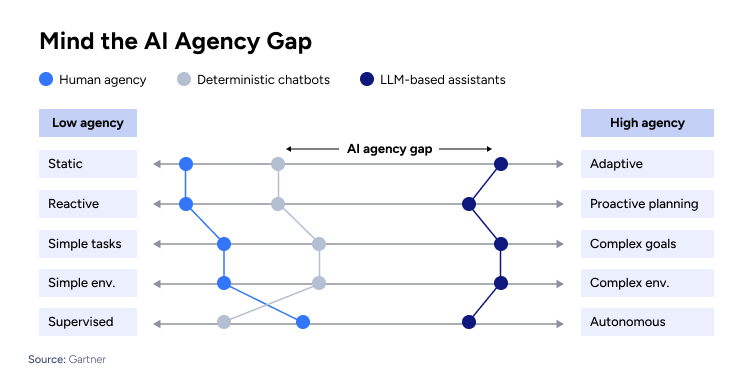

While today’s AI models primarily respond to user prompts, the next evolution, agentic AI, will enable systems to plan, execute, and optimize complex tasks independently, without continuous supervision. Gartner projects that by 2028, 15% of routine work decisions will be handled by AI agents.

How Agentic AI is Changing Business Operations

Unlike generative AI, which creates content, agentic AI focuses on decision-making and action. It identifies goals, generates strategies, and executes them by interacting with digital tools. This means it can automate entire workflows, from managing IT systems to handling customer service escalations:

- AI agents will automate complex workflows and execute technical projects based on natural language instructions.

- Developers will be among the first to benefit, as AI coding assistants become more advanced.

- AI will enhance situational awareness, analyzing massive datasets overnight and identifying key actions for businesses.
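The goal, plan, and act cycle described above can be sketched in a few lines. This is a toy illustration only: the `Agent` class, the two tools, and the fixed planning rule are all hypothetical, and a real agent would typically ask a language model to choose its steps.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Agent:
    """Toy agent: plans steps for a goal, then executes them with tools."""
    tools: Dict[str, Callable[[str], str]]
    log: List[str] = field(default_factory=list)

    def plan(self, goal: str) -> List[str]:
        # Hypothetical planner: run every registered tool once, in order.
        # A production agent would generate this plan dynamically.
        return list(self.tools)

    def run(self, goal: str) -> List[str]:
        for step in self.plan(goal):
            result = self.tools[step](goal)        # act by calling a tool
            self.log.append(f"{step}: {result}")   # keep an audit trail
        return self.log


# Hypothetical tools standing in for CRM or ticketing integrations.
def lookup_ticket(goal: str) -> str:
    return f"found ticket for '{goal}'"


def draft_reply(goal: str) -> str:
    return f"drafted reply about '{goal}'"


agent = Agent(tools={"lookup_ticket": lookup_ticket, "draft_reply": draft_reply})
steps = agent.run("late delivery")
for line in steps:
    print(line)
```

The log kept by `run` is the kind of record a governance process could review when agents act without continuous supervision.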

Key Use Cases

While many applications of agentic AI are still in the experimental or pilot phase today, emerging use cases are beginning to take shape across various industries and functions. In manufacturing, AI-driven systems predict wear and tear on production equipment, reducing downtime by identifying failures before they happen. In logistics, AI supply chain agents adjust inventory and delivery routes in response to real-time data. AI assistants in healthcare now manage pre-operative procedures, reminding patients about fasting requirements and medications.

- Customer Service: AI agents analyze customer interactions, predict service issues, and offer solutions. AI-powered support agents can autonomously handle queries, reducing response times and operational costs.

- Sales Automation: CRM-integrated AI agents qualify leads, book meetings, and recommend follow-ups. AI-powered sales assistants help teams prioritize high-value opportunities.

- Healthcare Support: AI-powered virtual caregivers track medication schedules and interact with patients. Early trials suggest a reduction in hospital readmissions due to improved patient adherence.

- Manufacturing: AI agents optimize production schedules, lowering costs and increasing efficiency. AI-driven predictive maintenance reduces machine failures and extends equipment lifespan.

Risks and Challenges

The widespread adoption of agentic AI raises concerns about accountability and security. Organizations need governance frameworks to ensure AI decisions align with ethical and regulatory standards. As these systems gain autonomy, businesses must monitor AI actions to prevent unintended consequences.

Agentic AI is still evolving, but its impact on industries is already visible. Companies investing in AI-driven automation must focus on transparency, compliance, and security to maximize benefits while managing risks.

Digital Trend #2: Nuclear-Powered AI

The AI revolution is pushing digital infrastructure to its limits, requiring vast amounts of energy. To sustain the next wave of AI-powered applications, major tech firms are now turning to nuclear power as a reliable and scalable solution. While nuclear power was previously overlooked, it is making a comeback as a clean and controllable energy source. Key developments include:

- Small Modular Reactors (SMRs) offer more flexible, scalable nuclear solutions.

- Advanced Modular Reactors (AMRs) promise higher efficiency and reduced nuclear waste.

- Nuclear fusion is attracting increased interest as a long-term route to abundant clean energy.

How Nuclear Energy Is Powering the Future of AI and Cloud Computing

In 2024, major tech firms began securing long-term nuclear energy deals:

- Google signed a deal with Kairos Power to purchase 500 megawatts from SMRs, with initial reactors expected by 2030.

- Microsoft partnered with Constellation Energy to restart a reactor at the Three Mile Island nuclear plant that has been offline since 2019.

- Amazon invested in X-energy, which aims to deploy 5 gigawatts of SMR-generated electricity by 2039.

- Meta issued a request for proposals to acquire up to 4 gigawatts of nuclear power for future AI operations.

Why Nuclear?

AI data centers require continuous energy. Nuclear reactors provide round-the-clock power, unlike solar and wind, which depend on weather conditions. Moreover, a single nuclear reactor generates far more power than conventional renewable sources. Small modular reactors produce 80 to 300 megawatts each, with some designs reaching 500 megawatts. This shift supports AI processing, real-time data analysis, and cloud-based workloads without power limitations and with reduced carbon emissions.
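To put these figures in perspective, the short calculation below estimates how many SMR units would be needed to reach the capacity targets mentioned in this article (500 megawatts, 4 gigawatts, and 5 gigawatts). It is a rough sketch: it assumes every unit runs at its full rated output and ignores downtime, so real deployments would need more headroom.

```python
import math


def reactors_needed(target_mw: float, unit_mw: float) -> int:
    """Smallest whole number of units whose combined rated output meets the target."""
    return math.ceil(target_mw / unit_mw)


# Targets taken from the deals described above, in megawatts.
for target_mw in (500, 4000, 5000):
    fewest = reactors_needed(target_mw, 300)  # largest typical SMR size
    most = reactors_needed(target_mw, 80)     # smallest typical SMR size
    print(f"{target_mw} MW: between {fewest} and {most} SMR units")
```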

Future Prospects

SMRs are still years away from large-scale deployment. Regulatory approvals, safety concerns, and high initial costs remain barriers. The U.S. Department of Energy has committed $12 billion to accelerate nuclear projects, but commercial viability depends on reducing costs and scaling production. However, tech companies’ investments in nuclear energy signal a long-term shift to a nuclear-powered AI infrastructure that, if successful, could redefine how computing power is generated and sustained.

Digital Trend #3: Post-Quantum Cryptography

AI is also reshaping cybersecurity, enabling both more sophisticated AI-powered cyberattacks and advanced AI-driven defenses. According to Capgemini, 97% of organizations have faced security breaches or issues linked to Gen AI in the past year.

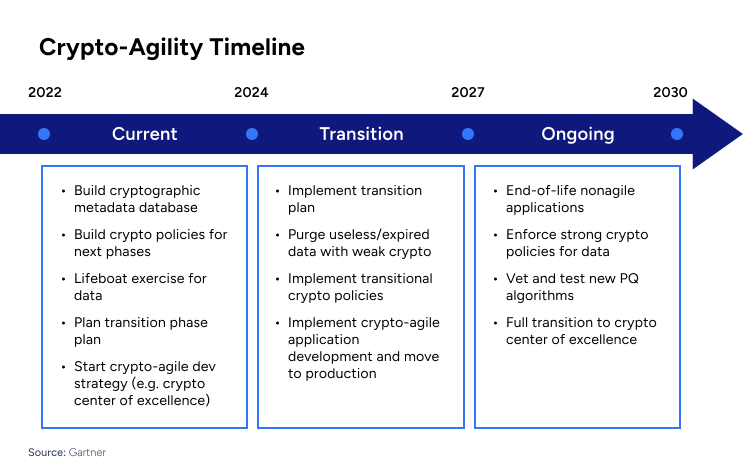

For 2025, 44% of top executives identify Gen AI’s impact on cybersecurity as a leading technology trend. In response, organizations are investing in endpoint and network security, automating threat detection with AI-driven intelligence, and strengthening encryption algorithms. The next major cybersecurity disruption is expected to come from quantum computing, which is driving a growing focus on post-quantum cryptography (PQC).

The Quantum Threat to Encryption

Most public-key encryption today relies on mathematical problems, such as factoring large integers, that classical computers cannot solve in any practical amount of time. A sufficiently powerful quantum computer, however, could use Shor’s algorithm to factor large numbers exponentially faster, which would break RSA, ECC, and other widely used public-key methods. The U.S. National Institute of Standards and Technology (NIST) selected four PQC algorithms in 2022 to replace vulnerable cryptographic systems and published its first three finalized standards in August 2024, preparing for an era where quantum computing could undermine global cybersecurity.
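The link between factoring and RSA can be shown with a deliberately tiny key. The sketch below is not Shor’s algorithm; it uses plain trial division, which only works because the primes are small, to show that anyone who can factor the public modulus can rebuild the private key. All values are illustrative.

```python
from typing import Tuple

# Toy RSA key built from tiny primes (real keys use primes of about 1024 bits each).
p, q = 61, 53
n = p * q                  # public modulus
e = 17                     # public exponent
phi = (p - 1) * (q - 1)    # kept secret by the key owner
d = pow(e, -1, phi)        # private exponent (requires Python 3.8+)

message = 65
ciphertext = pow(message, e, n)


def factor(modulus: int) -> Tuple[int, int]:
    """Trial division: feasible only because the modulus is tiny."""
    candidate = 2
    while modulus % candidate:
        candidate += 1
    return candidate, modulus // candidate


# An attacker who can factor n recovers the private exponent from public data alone.
found_p, found_q = factor(n)
recovered_d = pow(e, -1, (found_p - 1) * (found_q - 1))
recovered_message = pow(ciphertext, recovered_d, n)
print(recovered_message == message)
```

For a 2048-bit modulus, trial division and every other known classical method are impractical, which is exactly the assumption a large quantum computer running Shor’s algorithm would remove.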

The Next-Gen Security Landscape

Advances in quantum hardware are pushing governments and enterprises to accelerate the shift to PQC:

- Quantum Hardware: Alphabet’s 105-qubit “Willow” processor has demonstrated progress in quantum error correction, a step toward machines that could eventually break traditional encryption.

- Qubit Scaling: IBM’s Condor (1,121 qubits) and other quantum projects suggest that breaking RSA-2048 encryption, once considered secure for decades, may become feasible within the next 5-10 years.

- U.S. Federal Agencies: The National Security Agency (NSA) mandated a transition to PQC for classified communications by 2035.

- Financial Institutions: Banks like JPMorgan Chase and Mastercard are testing quantum-resistant encryption to protect transactions.

However, transitioning to PQC is complex. Unlike traditional encryption updates, it raises several challenges:

- Algorithm Compatibility: Replacing encryption across networks without disrupting legacy systems.

- Processing Overhead: Some PQC algorithms require more computational resources than current encryption methods.

- Hybrid Security Models: Many organizations are adopting dual-layer encryption, combining classical and post-quantum cryptographic methods until quantum-safe infrastructure is fully operational.
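The dual-layer idea in the last bullet can be sketched as follows: a session key is derived from both a classical shared secret and a post-quantum shared secret, so an attacker has to break both to recover it. This is a minimal sketch using only the Python standard library. The two secrets are random placeholders standing in for the outputs of a real classical key exchange and a real post-quantum key-encapsulation mechanism, and the `hybrid_key` helper and its `info` label are hypothetical.

```python
import hashlib
import hmac
import secrets


def hkdf_sha256(key_material: bytes, info: bytes, length: int = 32,
                salt: bytes = b"") -> bytes:
    """HKDF (RFC 5869) with SHA-256, written with the standard library only."""
    # Extract step: condense the input key material into a pseudorandom key.
    prk = hmac.new(salt or b"\x00" * 32, key_material, hashlib.sha256).digest()
    # Expand step: stretch the pseudorandom key to the requested length.
    output, block, counter = b"", b"", 1
    while len(output) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        output += block
        counter += 1
    return output[:length]


def hybrid_key(classical_secret: bytes, pq_secret: bytes) -> bytes:
    """Derive one session key that depends on both shared secrets."""
    return hkdf_sha256(classical_secret + pq_secret, info=b"hybrid-demo")


# Placeholders: in practice these come from two separate key exchanges.
classical_secret = secrets.token_bytes(32)
pq_secret = secrets.token_bytes(32)
session_key = hybrid_key(classical_secret, pq_secret)
print(len(session_key))
```

Because the derived key depends on both inputs, it stays secret as long as at least one of the two underlying exchanges remains unbroken.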

This is why 2025 is the year when CIOs must act. With quantum computing accelerating, NIST finalizing standards, and government mandates kicking in, companies that delay the transition risk exposing their data to quantum-capable adversaries in the coming years.

Digital Trend #4: Micro LLMs

Large language models (LLMs) have grown rapidly in size, with models like MT-NLG at 530 billion parameters and PaLM 2 at a reported 340 billion parameters. However, their high computational costs (training GPT-3 alone is estimated to have cost between $4 million and $10 million) have made them impractical for many enterprises. Micro LLMs, with 100 million to 10 billion parameters, provide an alternative that reduces power consumption and operational costs.

Cost and Resource Efficiency

General-purpose LLMs require extensive computational resources and specialized hardware, increasing costs. Fine-tuned micro LLMs can run on lower-power hardware and on-premises servers, cutting cloud dependency. Companies like Microsoft and Meta are developing compact models optimized for on-device AI processing, lowering operational expenses for businesses adopting AI at scale.
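A rough way to see why parameter count drives hardware cost is to estimate the memory needed just to hold a model’s weights: parameters multiplied by bytes per weight. The sketch below applies this to the sizes mentioned in this section. It is a simplification that ignores activations, caches, and runtime overhead, and the 16-bit and 4-bit precisions are assumptions chosen for illustration.

```python
def weight_memory_gb(parameters: float, bits_per_weight: int) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return parameters * bits_per_weight / 8 / 1e9


# Parameter counts taken from the figures quoted in this section.
models = {
    "MT-NLG (530B)": 530e9,
    "micro LLM, upper bound (10B)": 10e9,
    "micro LLM, lower bound (100M)": 100e6,
}

for name, parameters in models.items():
    at_16_bit = weight_memory_gb(parameters, 16)
    at_4_bit = weight_memory_gb(parameters, 4)
    print(f"{name}: {at_16_bit:,.2f} GB at 16-bit, {at_4_bit:,.2f} GB at 4-bit")
```

Under these assumptions, the largest model needs roughly a terabyte for its weights, while a micro LLM at the upper bound fits in the memory of a single commodity GPU or a high-end laptop.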

Use Cases

Micro LLMs are being deployed in finance, cybersecurity, and customer support. Banks use them for fraud detection and transaction analysis, while healthcare providers use them for medical documentation and diagnostics. JPMorgan Chase is integrating these models to enhance real-time transaction monitoring and compliance checks.

Moreover, large models can generate hallucinations (incorrect yet plausible responses), partly because of their broad training data. Micro LLMs are fine-tuned on domain-specific datasets, which can improve accuracy in legal, finance, and healthcare applications. They also mitigate data privacy risks, as they can be deployed on private servers, preventing exposure of sensitive enterprise data to third-party AI providers.

To sum up, LLMs are best suited to large-scale, general-purpose tasks, while micro LLMs fit specialized, real-time applications that demand low resource consumption. Micro LLMs might struggle with tasks outside their specialization, but they can be deployed on-premises, which reduces the risk of data exposure.

Future Developments

AI companies are shifting towards smaller, specialized models. Microsoft’s Phi-3 and Meta’s Llama 3 model families, both released in 2024, include compact variants aimed at low-latency, real-time processing on edge devices. This transition will make AI deployment more cost-effective and scalable for industries with strict security and processing requirements.

Digital Trend #5: Digital Twins

Digital twins are virtual replicas of physical assets, systems, or processes that mirror real-world conditions in real time. Their adoption has grown significantly in recent years, particularly in advanced industries, where nearly 75% of companies have implemented some level of digital-twin technology. The global digital twin market is projected to expand at a 60% annual growth rate, reaching $73.5 billion by 2027.

In 2024, major industrial players scaled their digital-twin projects beyond pilot phases. Companies in aerospace, automotive, and energy deployed digital twins to optimize operations and predict failures. Siemens expanded its digital-twin platform, integrating real-time IoT data for predictive maintenance. Tesla continued refining its factory digital twins, enhancing production efficiency. The healthcare sector also saw advancements, with GE Healthcare using digital twins to simulate hospital workflows and reduce patient wait times.
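The predictive-maintenance pattern mentioned above can be illustrated with a minimal twin of a single machine. The sketch keeps a rolling window of sensor readings and flags the asset when the average drifts past a limit. The `PumpTwin` class, the vibration readings, and the threshold are all hypothetical; production digital twins rely on far richer physical and statistical models.

```python
from collections import deque
from statistics import mean


class PumpTwin:
    """Virtual copy of one pump, updated from (hypothetical) sensor readings."""

    def __init__(self, vibration_limit: float, window: int = 3):
        self.vibration_limit = vibration_limit
        self.readings = deque(maxlen=window)  # only the most recent readings are kept

    def sync(self, vibration_mm_s: float) -> None:
        """Mirror the latest real-world measurement into the twin."""
        self.readings.append(vibration_mm_s)

    def needs_maintenance(self) -> bool:
        """Flag the pump once a full window of readings averages above the limit."""
        window_full = len(self.readings) == self.readings.maxlen
        return window_full and mean(self.readings) > self.vibration_limit


twin = PumpTwin(vibration_limit=4.0)
alerts = []
for reading in [2.1, 2.4, 3.9, 4.8, 5.2]:  # made-up vibration values in mm/s
    twin.sync(reading)
    alerts.append(twin.needs_maintenance())
print(alerts)
```

Averaging over a window rather than reacting to a single reading is one simple way to avoid raising an alert on a one-off spike.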

Moving to a Full-System-Level Simulation

In 2025, digital twins are expected to move from individual asset monitoring to full system-level simulations. Manufacturing firms that adopted digital twins in previous years have started reporting 5–7% monthly cost savings in production scheduling. Product developers have cut total development times by 20–50% and reduced quality issues by up to 25%. The next wave of adoption will focus on scaling these benefits across entire production networks.

Applications and Impact

- Manufacturing: Digital twins can predict production bottlenecks more effectively than traditional spreadsheet models. Factories implementing digital twins in 2024 reduced equipment downtime and improved throughput.

- Product Development: Companies using digital twins for R&D accelerated prototyping, reducing product launch times and defect rates.

- Supply Chain Optimization: Leading firms improved logistics efficiency, lowering transportation and labor costs by 10% while increasing customer service reliability by 20%.

- AI Integration: The combination of digital twins and generative AI is enhancing predictive capabilities. In 2024, AI-assisted digital twins enabled real-time optimization in industrial environments. In 2025, this synergy could further automate decision-making, reducing operational inefficiencies.

With enterprise adoption accelerating, 2025 will be a defining year for digital twins, transitioning from isolated use cases to fully integrated operational tools across industries.

Conclusion

The top 2025 digital trends reflect a fundamental shift in how technology integrates with business and society. As AI becomes more autonomous, quantum security threats rise, and new energy solutions emerge, organizations must adapt by investing in AI-driven automation, resilient cybersecurity, and sustainable computing infrastructure.

Industries that embrace digital twins for real-time optimization, leverage micro LLMs for cost-effective AI deployment, and transition to nuclear-powered AI for stable energy solutions will gain a competitive edge in the evolving digital landscape.

Staying ahead of these trends is no longer optional—it’s a necessity for long-term growth, security, and innovation. By strategically aligning with these emerging technologies, businesses can drive transformation, enhance efficiency, and future-proof their operations in an era of unprecedented digital acceleration.

With Consultport, you can have access to qualified AI consultants and digital transformation consultants who will help you navigate the disruptive potential of these technologies for 2025 and beyond.

Want to know more? Find a consultant with Consultport.
