What’s a Large Language Model? A Complete LLM Guide

Introduction

Large Language Models (LLMs) like GPT-4, Claude, Gemini, DeepSeek, and Mistral have taken both the tech and business worlds by storm, demonstrating unprecedented capabilities in generating human-like text. Public enthusiasm soared with tools such as ChatGPT, which reportedly reached 100 million users within about two months of launch, making it the fastest-growing consumer application at the time. This excitement stems from LLMs’ potential to automate content creation, answer a wide range of questions, and augment workflows at scale.

Yet, beneath the hype lie fundamental shortcomings. Current LLMs learn from massive text corpora to predict likely word sequences, but they lack true world understanding, robust common-sense reasoning, and long-term memory. They also hallucinate (producing plausible-sounding but false or fabricated content) and inherit biases from their training data. As leaders plan their AI strategies, recognizing these limitations and the future trajectory of AI is crucial. This guide to LLMs provides a strategic overview of:

- Current shortcomings of LLMs and why they matter for enterprise adoption

- Global investment trends in generative AI, spanning the U.S., Europe, and China

- Industry applications reshaping healthcare, finance, manufacturing, and more

- Emerging AI research (e.g., retrieval-augmented generation, multimodal, hybrid AI, Mixture-of-Experts (MoE) architectures, RLHF 2.0) to overcome LLM weaknesses

- Practical guidance on how businesses can leverage today’s tools while preparing for tomorrow’s breakthroughs

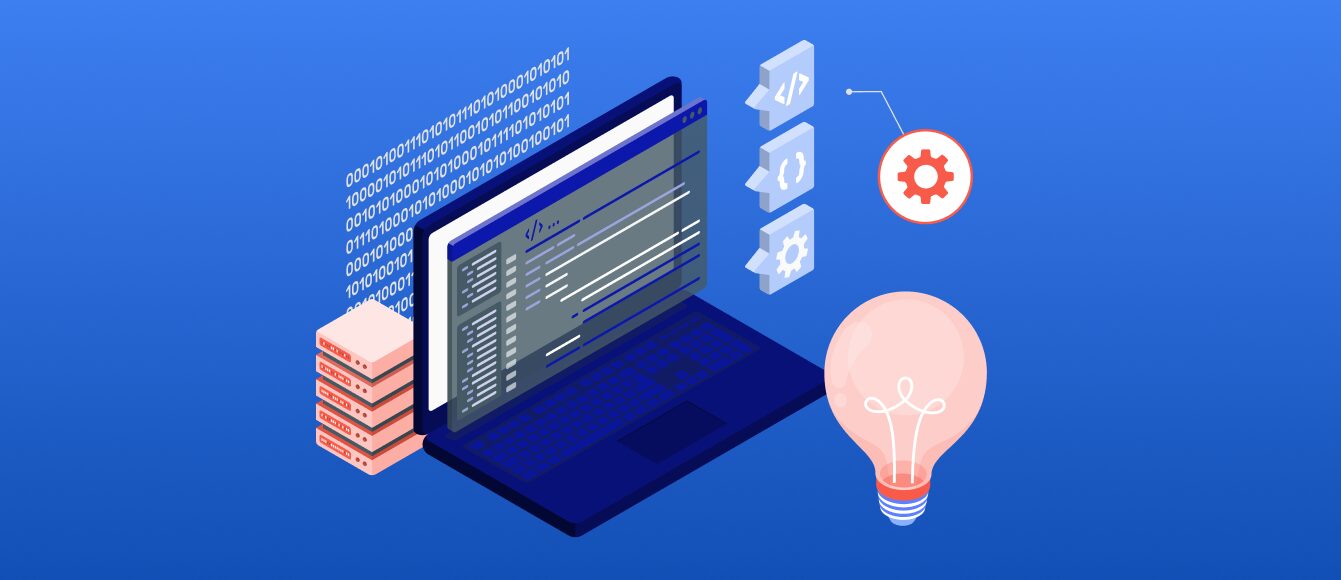

1. The Power and Limits of Today’s LLMs

1.1 What LLMs Do Well

LLMs excel at pattern recognition within language. By training on huge datasets (often terabytes of text), they learn to predict the next word (more precisely, the next token) in a sequence with remarkable fluency. This ability enables them to:

- Generate content quickly: Draft emails, summarize documents, create marketing copy, or produce boilerplate code.

- Answer open-ended questions: Thanks to broad training data, LLMs handle a wide range of topics right out of the box.

- Perform semantic search and categorization: They can sift through large text corpora and produce concise, human-readable outputs.
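To make “predicting the next word” concrete, here is a deliberately tiny stand-in: a bigram model that counts which word follows which in a toy corpus and returns the most frequent follower. Real LLMs use neural networks over subword tokens and condition on long contexts rather than single-word counts; the corpus and the helper names `train_bigram` and `predict_next` are hypothetical, chosen only for illustration.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for each word, how often every other word follows it."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus.
corpus = [
    "the model predicts the next word",
    "the model generates text",
]
model = train_bigram(corpus)
print(predict_next(model, "the"))   # model  ("model" follows "the" twice, "next" once)
print(predict_next(model, "next"))  # word
```

An LLM replaces the count table with a learned probability distribution over its whole vocabulary, which is why it can continue sequences it has never seen verbatim.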

When used on straightforward tasks with clear guardrails, LLMs dramatically reduce time and effort.

1.2 Core Limitations

1. Lack of Real-World Understanding and Hallucinations

Though highly coherent, LLMs do not genuinely “understand” meaning. They predict words based on statistical patterns without verifying factual accuracy. As a result, they sometimes produce “hallucinations”: confident-sounding yet incorrect or entirely fabricated answers. In one study of legal questions, state-of-the-art models hallucinated in 69–88% of their responses.

2. Static, Outdated Knowledge

Once trained, LLMs don’t automatically learn new information unless specifically retrained. They might reference stale data if something changes after their training cutoff. Real-time integration (e.g., database lookups) is typically needed to keep information current.
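A minimal sketch of such real-time integration, assuming a simple keyword-overlap retriever as a stand-in for the vector search that a production retrieval-augmented generation (RAG) system would typically use: fresh records are looked up at query time and prepended to the prompt. The records and the names `retrieve` and `build_prompt` are hypothetical, and the actual model call is omitted.

```python
from __future__ import annotations


def retrieve(query: str, records: dict[str, str], top_k: int = 2) -> list[str]:
    """Rank records by how many words they share with the query (crude stand-in for vector search)."""
    query_words = set(query.lower().split())
    scored = []
    for record_id, text in records.items():
        overlap = len(query_words & set(text.lower().split()))
        if overlap > 0:
            scored.append((overlap, record_id))
    # Highest overlap first; ties broken alphabetically by record id.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [records[record_id] for _, record_id in scored[:top_k]]


def build_prompt(query: str, records: dict[str, str]) -> str:
    """Prepend freshly retrieved facts so the answer is not limited to the training cutoff."""
    snippets = retrieve(query, records)
    context = "\n".join(f"- {s}" for s in snippets) or "- (no matching records)"
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


# Hypothetical records, e.g. rows synced nightly from an internal database.
records = {
    "pricing": "the standard plan price changed to 30 euros in march",
    "support": "support hours are 8 to 18 on weekdays",
}
print(build_prompt("what is the standard plan price", records))
```

Because the facts arrive with the prompt rather than living in the model’s weights, updating the database updates the answers without retraining.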

3. Bias and Ethical Concerns

LLMs learn from text data that can contain societal biases, stereotypes, or offensive content. These biases can appear in model outputs, undermining fairness and trust.

4. Poor Long-Term Reasoning and Memory

Every LLM can process only a limited “context window” of text at a time, which constrains multi-step tasks and in-depth reasoning. When asked to plan across multiple steps, models can produce logical-sounding but unworkable solutions.
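One common way applications cope with a fixed context window is to keep only the most recent messages that fit a token budget. The sketch below assumes one token per whitespace-separated word, a rough proxy (real tokenizers split text into subwords), and the helper names are hypothetical.

```python
from __future__ import annotations


def count_tokens(text: str) -> int:
    """Rough proxy: one token per whitespace-separated word (real tokenizers use subwords)."""
    return len(text.split())


def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the window; older ones are dropped."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "step one: collect the requirements",       # 5 tokens
    "step two: draft the plan",                 # 5 tokens
    "step three: review the draft with legal",  # 7 tokens
]
print(fit_to_context(history, max_tokens=12))
# ['step two: draft the plan', 'step three: review the draft with legal']
```

The first step silently disappears from what the model sees, one reason long multi-step plans can lose track of earlier constraints.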

5. Opaque Internal Reasoning

Because they are built on billions of weighted neural connections, explaining why a model generated a specific response is challenging. This black-box nature complicates debugging, auditing, and user trust.

6. High Computational Requirements

Training and running large models can be prohibitively expensive. Only well-funded organizations or big tech firms can routinely develop frontier models.

7. Sparsity and Efficiency Challenges

Even in large-scale architectures, many parameters are underutilized for any specific task. Mixture-of-Experts (MoE) architectures (e.g., Mistral AI’s Mixtral 8×7B) address this by activating only a small subset of “experts” for each token, reducing computational overhead while maintaining performance.
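A minimal sketch of top-k expert routing, assuming the gating scheme described for Mixtral (softmax over the k highest router scores, with k = 2 of 8 experts). The toy experts and router scores are hypothetical; in a real MoE layer each expert is a feed-forward network and the router is a learned linear layer.

```python
from __future__ import annotations

import math
from typing import Callable


def top_k_gate(logits: list[float], k: int) -> list[tuple[int, float]]:
    """Select the k highest-scoring experts and softmax-normalize only their scores."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    max_logit = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[i] - max_logit) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(
    x: float,
    router_logits: list[float],
    experts: list[Callable[[float], float]],
    k: int = 2,
) -> float:
    """Weighted sum over the selected experts only; unselected experts are never evaluated."""
    return sum(weight * experts[i](x) for i, weight in top_k_gate(router_logits, k))


# Hypothetical toy experts: expert s just multiplies its input by s.
experts = [lambda x, s=s: s * x for s in range(8)]
router_logits = [0.1, 2.0, 0.3, 2.0, -1.0, 0.0, 0.5, 0.2]
print(top_k_gate(router_logits, k=2))            # [(1, 0.5), (3, 0.5)]
print(moe_forward(1.0, router_logits, experts))  # 2.0
```

Only 2 of the 8 expert functions run for this input, which is where the compute savings come from: total parameters grow with the number of experts, but per-token cost grows only with k.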

8. Alignment and Reinforcement Learning from Human Feedback (RLHF) 2.0

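Standard RLHF pipelines rely on extensive human preference labels and a separately trained reward model. Newer techniques aim to make alignment cheaper and more scalable: Constitutional AI (Anthropic) replaces much of the human labeling with AI feedback guided by a written set of principles, while Direct Preference Optimization (DPO) trains directly on preference pairs without a separate reward model or reinforcement-learning loop. Both aim to address bias and safety concerns more efficiently.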
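DPO replaces the reward model and reinforcement-learning step with a single loss on preference pairs. As a sketch of the published objective: for a prompt x with preferred response y_w and rejected response y_l, the per-pair loss is −log σ(β · [(log π_θ(y_w|x) − log π_ref(y_w|x)) − (log π_θ(y_l|x) − log π_ref(y_l|x))]), where π_θ is the model being trained, π_ref is a frozen reference model, and β is a hyperparameter. The function below computes this from summed log-probabilities; the input values are hypothetical.

```python
import math


def softplus(v: float) -> float:
    """Numerically stable log(1 + exp(v))."""
    return max(v, 0.0) + math.log1p(math.exp(-abs(v)))


def dpo_loss(
    policy_chosen: float,
    policy_rejected: float,
    ref_chosen: float,
    ref_rejected: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one preference pair, from summed log-probabilities of each response.

    policy_*: log-probs under the model being trained; ref_*: under the frozen reference model.
    """
    chosen_margin = policy_chosen - ref_chosen
    rejected_margin = policy_rejected - ref_rejected
    z = beta * (chosen_margin - rejected_margin)
    return softplus(-z)  # equals -log(sigmoid(z))


# Hypothetical log-probabilities for one prompt.
print(dpo_loss(-12.0, -13.0, -12.0, -13.0))  # policy == reference: log(2), about 0.693
print(dpo_loss(-10.0, -15.0, -12.0, -13.0))  # policy favors the chosen answer more: about 0.513
```

Minimizing this loss pushes the model to raise the probability of preferred answers relative to the reference model, with β controlling how far it may drift.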

9. Catastrophic Forgetting

When fine-tuned on new data, LLMs can “forget” previously learned information. Techniques such as elastic weight consolidation (EWC) and other continual-learning methods are under active research to preserve older knowledge while incorporating new data.
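Elastic weight consolidation adds a penalty that anchors parameters important to earlier tasks, weighting each parameter’s drift by an estimate of its importance (the diagonal of the Fisher information, computed on the old task). A minimal sketch of the penalty term, (λ/2) Σ_i F_i (θ_i − θ*_i)², with hypothetical parameter and importance values:

```python
from __future__ import annotations


def ewc_penalty(params: list[float], old_params: list[float], fisher: list[float], lam: float) -> float:
    """(lam / 2) * sum_i F_i * (theta_i - theta_old_i)^2"""
    return 0.5 * lam * sum(f * (p - p_old) ** 2 for p, p_old, f in zip(params, old_params, fisher))


def total_loss(
    new_task_loss: float,
    params: list[float],
    old_params: list[float],
    fisher: list[float],
    lam: float = 1.0,
) -> float:
    """Loss on the new data plus the EWC anchor toward the old task's solution."""
    return new_task_loss + ewc_penalty(params, old_params, fisher, lam)


# Hypothetical values: parameter 0 mattered a lot for the old task (F = 5.0), parameter 1 little (F = 0.5).
old_params = [1.0, 1.0]
fisher = [5.0, 0.5]
print(ewc_penalty([1.0, 2.0], old_params, fisher, lam=2.0))  # 0.5: moving the unimportant parameter is cheap
print(ewc_penalty([2.0, 1.0], old_params, fisher, lam=2.0))  # 5.0: moving the important one is costly
```

The same one-unit change costs ten times more on the parameter the old task relied on, so training on new data tends to adjust the less critical weights instead.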

Despite these weaknesses, LLMs can still offer immense value if deployed wisely. Enterprises should pair LLMs with oversight, domain constraints, and a validation pipeline—especially in high-stakes scenarios (healthcare, legal advice, finance).

1.3 LLM Architectures and Technical Innovations

While parameter scaling has driven impressive leaps in performance, forward-thinking research emphasizes new architectures and efficiency methods:

- Mixture-of-Experts (MoE) Models

Models like Mistral AI’s Mixtral 8×7B and Google’s Gemini 1.5 dynamically activate specialized subnetworks (“experts”) for each input. Mixtral, for instance, uses roughly 13B of its ~47B parameters per token and was reported to match or outperform GPT-3.5 and Llama 2 70B on many benchmarks, at a lower computational cost than a dense model of comparable size.

- Transformer Alternatives (e.g., Mamba, RetNet)

These architectures tackle the quadratic cost of standard self-attention by using state-space models (Mamba) or retention mechanisms with a recurrent form (RetNet), enabling longer context windows without prohibitive computing overhead.

- Parameter-Efficient Fine-Tuning

Techniques such as LoRA (Low-Rank Adaptation) and QLoRA have democratized model adaptation. They allow domain-specific customization by training only a small number of extra parameters, an approach critical for enterprises needing to integrate proprietary knowledge.

- Advanced RLHF Approaches

Constitutional AI and Direct Preference Optimization (DPO) refine model alignment with fewer costly human labels or a simpler training pipeline. By iterating on feedback loops more intelligently, organizations can better address biases and safety.
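To illustrate why LoRA is so cheap: it keeps the pretrained weight matrix W frozen and learns two small matrices, B (d×r) and A (r×k), so the adapted weight is W + (α/r)·BA, following the scaling convention in the LoRA paper. The sketch below uses plain Python lists rather than a tensor library and shows both the merge and the parameter savings; the 4096×4096 matrix size and rank r = 8 are hypothetical, and the helper names are illustrative.

```python
from __future__ import annotations

from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product (rows of a times columns of b)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_merge(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A; W stays frozen, only B and A would be trained."""
    r = len(a)  # rank = number of rows of A
    delta = matmul(b, a)
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]


def trainable_params(d: int, k: int, r: int) -> tuple[int, int]:
    """Parameters updated by full fine-tuning (d*k) vs. LoRA (r*(d + k)) for one d x k matrix."""
    return d * k, r * (d + k)


# Toy 2x2 example with rank 1.
w = [[1.0, 0.0], [0.0, 1.0]]
b = [[0.0], [2.0]]  # d x r
a = [[1.0, 0.0]]    # r x k
print(lora_merge(w, b, a, alpha=1.0))  # [[1.0, 0.0], [2.0, 1.0]]

full, lora = trainable_params(4096, 4096, 8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

Because only B and A are stored per domain, an enterprise can keep one shared base model and swap in small adapters for each proprietary use case.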

These architectural shifts complement raw scaling and can reduce costs while improving adaptability, an attractive proposition for any organization seeking cutting-edge yet efficient LLM deployments.

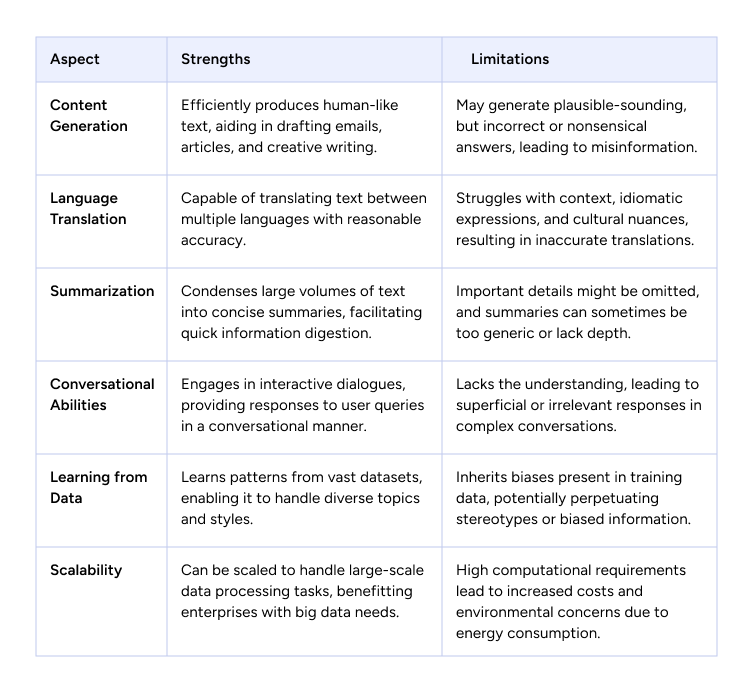

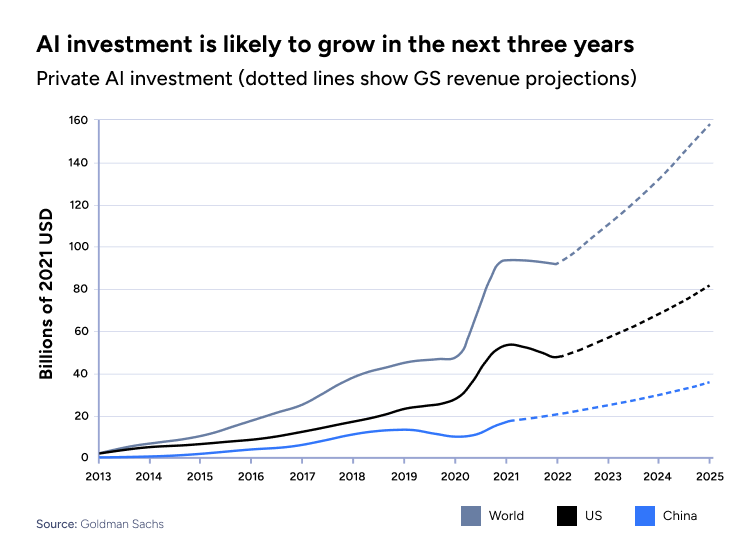

2. The LLM and Generative AI Investment Landscape

2.1 Surging Global Funding

Generative AI has unleashed a frenzy of investment. According to Our World in Data, funding for genAI startups jumped nearly nine-fold in 2023, surpassing $22 billion. By early 2024, global VC funding for AI reached $45 billion, reflecting sustained investor enthusiasm—despite broader market headwinds.

Why such exuberance? Analysts see LLMs as a transformative platform technology across industries, comparable to the early internet or smartphones. McKinsey & Company estimates that applying generative AI in various sectors could produce $2.6–$4.4 trillion in annual economic impact. Market forecasts project the global AI industry could exceed $1 trillion within the next decade.

The accompanying chart, sourced from Goldman Sachs Research and the Stanford Institute for Human-Centered Artificial Intelligence, illustrates the sharp rise in private AI investments over the past decade. It highlights significant growth in global AI funding, with the United States leading in investment volume, followed by China.

The dotted lines indicate Goldman Sachs' revenue projections for AI-driven cloud services, including Microsoft Azure, NVIDIA, Google Cloud, and Amazon Web Services (AWS), forecasting a continued upward trend through 2025 and beyond. This data underscores the increasing role of AI in shaping global economies and the strategic investments being made to drive innovation and competition in the AI sector.

2.2 United States, European Union, and China

1. United States

- Private Sector Dominance: The U.S. leads global private AI investment, with $67.2 billion in 2023, roughly 8.7 times the total of China, the next-largest investor. Tech giants like Google, Microsoft, Meta, and Amazon invest billions in R&D. Venture capital also pours into AI startups (e.g., OpenAI, Anthropic, Hugging Face, Inflection AI).

- Government Support: Federal AI spending hit $3.3B in 2022, supported by initiatives such as the National AI Initiative and the CHIPS and Science Act (source: “Feds Spent $3B on AI Last Year”). Despite this growth, the vast majority of U.S. AI funding remains private.

2. European Union

- Public-Private Collaboration: EU private-sector AI funding trails the U.S., but the European Commission channels ~€1 billion/yr into AI via Horizon Europe and Digital Europe programs.

- Mobilizing Large Funds: The EU is setting up a plan to mobilize €200 billion (~$207B) in combined public-private AI investments, including building AI “gigafactories” and supercomputing infrastructure. Member states like France and Germany have also announced multi-billion-euro AI strategies to close the gap with the U.S. and China.

3. China

- State-Driven Model: China’s central and local governments inject large sums into AI. The National Venture Capital Guidance Fund, for example, aims to mobilize approximately 1 trillion yuan (~$138 billion) from social capital to support technology startups, including in the AI sector.

- Corporate Giants and Startups: Tech titans Baidu, Alibaba, Tencent, Huawei, and ByteDance each invest heavily in AI R&D. Chinese AI startups secured about $12B in private funding in 2022, second only to the U.S. Despite regulatory crackdowns on the tech sector, the post-ChatGPT AI boom ignited major venture rounds for companies like Zhipu AI, MiniMax, and Baichuan.

2.3 Notable Deals and Acquisitions

- Microsoft & OpenAI (USA): Microsoft’s multi-year, multi-billion-dollar partnership with OpenAI, totaling over $10B, has been pivotal. Microsoft gains preferential access to frontier models (like GPT-4) to integrate into Bing, GitHub Copilot, and Microsoft 365 Copilot.

- Alibaba & Moonshot AI (China): In early 2024, Alibaba led a $1B funding round in Moonshot AI, a nascent startup developing advanced LLM architectures. Other investors included Sequoia Capital China (HongShan), highlighting China’s AI arms race.

3. How LLMs Are Transforming Key Industries

3.1 Healthcare

- Clinical Summaries and EHR Parsing: Hospitals deploy LLM-powered software to condense complex patient notes into concise narratives—improving care coordination and saving physicians time.

- Diagnostic Assistance: Multimodal models combine language and image analysis to identify abnormalities in scans or X-rays. By grounding text analysis in real-world visuals, AI can offer more robust insights. In a related drug-discovery application, one pharmaceutical company reported 78% accuracy in predicting protein-drug interactions that previously required lengthy lab tests.

3.2 Financial Services

- Fraud Detection and Risk Analysis: Banks and insurers use LLMs to detect anomalies in vast transaction datasets, accelerating the discovery of fraud or market manipulation. Advanced approaches, such as generative AI for risk management, further enhance fraud detection by identifying complex patterns and mitigating risks in financial operations.

- Customer Support Chatbots: AI assistants address routine banking inquiries, reducing human agent workloads. LLMs also summarize analyst reports, helping wealth managers track market events more efficiently.

3.3 Manufacturing & Supply Chain

AI in smart manufacturing is transforming industrial operations by improving efficiency and automation.

- Predictive Maintenance: LLMs parse sensor data and technician logs to forecast equipment failures before they occur, minimizing downtime.

- Quality Control: Natural language systems integrate with vision models to detect defects on assembly lines. This synergy can cut inspection times by up to 50% in certain industrial contexts.

3.4 Niche Industry Applications

- Climate Tech: Projects like ClimateBERT and CarbonPlan use LLMs to parse large sets of ESG reports or identify optimal carbon capture strategies. Some organizations report up to a 30% improvement in analyzing environmental disclosures for compliance and investment decisions.

- Material Science and Drug Discovery: Collaborations between Microsoft Azure Quantum and leading research labs have leveraged generative molecular design to accelerate drug discovery. Early trials suggest the screening cycle for viable compounds can be shortened by months.

- Space Exploration: NASA experiments with LLMs for autonomous rover communication and anomaly detection on deep-space missions. By synthesizing sensor data with textual mission logs, these models aim to reduce reliance on costly oversight from Earth-based teams.

3.5 Enterprise LLM Orchestration

Beyond single-model deployments, sophisticated organizations now implement “model orchestration” or “model mesh” frameworks:

- Task-Based Routing: Systems dynamically select the best model (open-source or proprietary) based on performance, cost, or security requirements.

- Adaptive RAG Pipelines: Retrieval-augmented generation can be toggled on or off per query type, ensuring real-time accuracy where needed.

- Unified Evaluation Metrics: Tools monitor latency, quality, and compliance across multiple models in production. As a result, some enterprises see 30–60% reductions in AI infrastructure costs while maintaining or improving performance.
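A minimal sketch of task-based routing with a per-query retrieval toggle, assuming a hypothetical catalog in which each model has a cost, a quality score from an internal evaluation, and a flag for whether data stays on-premises. The router picks the cheapest model that meets the quality and security constraints; the model names, scores, and query categories are all illustrative, not real products or benchmarks, and the evaluation-metrics layer is not shown.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # hypothetical price units
    quality: float             # e.g. score on an internal evaluation set, 0 to 1
    on_premises: bool          # True if data never leaves the company network


def route(options: list[ModelOption], min_quality: float, requires_on_prem: bool) -> ModelOption:
    """Pick the cheapest model that meets the quality bar and, if required, the data-residency rule."""
    eligible = [
        m for m in options
        if m.quality >= min_quality and (m.on_premises or not requires_on_prem)
    ]
    if not eligible:
        raise ValueError("no model satisfies the constraints")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


# Hypothetical query categories where stale knowledge is risky, so retrieval is switched on.
FRESHNESS_SENSITIVE = {"pricing", "policy", "inventory"}


def use_retrieval(query_type: str) -> bool:
    return query_type in FRESHNESS_SENSITIVE


catalog = [
    ModelOption("small-open-model", 0.1, 0.70, True),
    ModelOption("large-open-model", 0.6, 0.85, True),
    ModelOption("hosted-frontier-model", 1.0, 0.92, False),
]
print(route(catalog, min_quality=0.8, requires_on_prem=False).name)  # large-open-model
print(route(catalog, min_quality=0.9, requires_on_prem=False).name)  # hosted-frontier-model
print(use_retrieval("pricing"), use_retrieval("brainstorming"))       # True False
```

Sending only the queries that genuinely need the most capable model to that model is the mechanism behind the cost reductions such frameworks aim for.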

Proper implementation and orchestration are essential for companies to scale AI efficiently while maintaining cost-effectiveness and compliance. A structured approach helps businesses avoid operational inefficiencies and maximize the value of AI investments. More insights on best practices can be found in this guide to AI transformation, with Consultport providing expertise in AI-driven innovation.

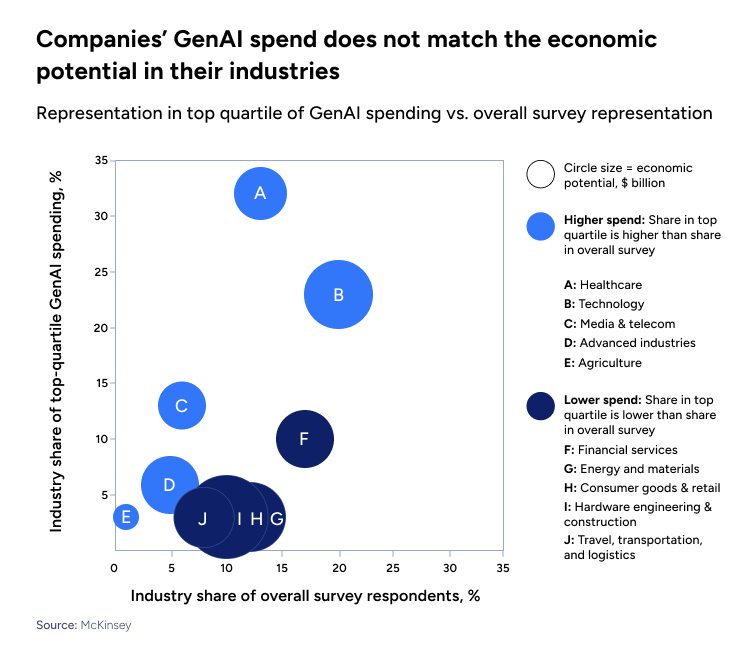

3.6 Companies’ Gen AI Spending vs. Economic Potential by Industry

This visualization from McKinsey & Company shows that industries with high generative AI (Gen AI) economic potential (depicted by larger circle sizes) do not always invest proportionally. The vertical axis reflects the industry’s share of top-quartile Gen AI spending, while the horizontal axis shows each industry’s share of total survey respondents.

- Blue circles represent industries with a higher share of Gen AI spending than their overall representation would suggest—e.g., Healthcare and Technology.

- Black circles indicate industries spending less on Gen AI than one might expect from their presence in the survey—e.g., Financial Services and Consumer Goods.

The key takeaway: while some sectors (like Healthcare and Technology) appear to be “leaning in” to Gen AI, others with substantial potential may be underinvesting. This underscores the importance of aligning AI budgets with a realistic assessment of an industry’s economic upside.

Conclusion

Large Language Models’ rapid rise has demonstrated their power to generate human-like text, accelerate content creation, and improve productivity across industries. Yet, as the sections above show, these models also exhibit notable shortcomings (hallucinations, bias, lack of real-world understanding) that limit their applicability in high-stakes scenarios.

Business leaders should remain aware of these limitations while harnessing LLMs for immediate benefits in customer service, content generation, or data analysis. In Part 2, we will explore how next-generation AI strategies and emerging architectures—such as retrieval-augmented generation, hybrid systems, and more—are shaping the future of intelligent automation and decision-making.
